Sebastian Marwecki

Software Engineer at

2016-2020 Ph.D. with Patrick Baudisch at Hasso Plattner Institute, Potsdam University

2019 Internship at Microsoft Research with Andy Wilson

PhD Research (2016-2020): Virtualizing Physical Space

The goal of my research is to allow virtual reality experiences to be run in arbitrary tracking volumes and with arbitrary physical objects.

VR experiences today are designed with a specific tracking volume and specific objects in mind, such as “a square 5 x 5 m space and a rubber handheld”. This prevents experiences from running in tracking volumes of smaller size or different shape or with different objects, making it impossible to share experiences, especially with home users.

I address this by creating an abstraction between VR applications and the space they are using. Instead of accessing space and physical objects directly, applications in my system express their needs in an abstract way, which my system then maps to the space and physical objects currently available to the user. This virtualization of physical space allows VR applications to run on a much wider range of installations.

I approached this problem in several projects, listed below in detail. VirtualSpace is a software system that allows multiple users immersed in different environments to co-use the same space for real-walking in VR. Mise-Unseen does the same for objects: it is a tool that allows multiple virtual objects to map onto the same physical prop. Scenograph runs VR experiences with non-linear storylines across arbitrary tracking volumes. Stuff-Haptics extends this to passive haptic props by procedurally mapping the content of a non-linear experience onto the room's geometry.

Virtualizing physical space would have substantial commercial impact, as the proliferation of real-walking VR is currently hindered by developers’ reluctance to require users to have space and objects.

Full Papers at ACM CHI and ACM UIST

8. Scenograph: Fitting Real-Walking VR Experiences into Various Tracking Volumes

Sebastian Marwecki and Patrick Baudisch. Full Paper and Demo at UIST '18

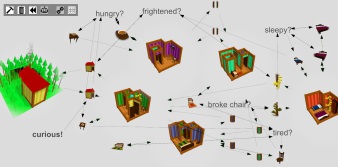

When developing a real-walking virtual reality experience, creators generally design virtual locations to fit a specific tracking volume. Unfortunately, this prevents the resulting experience from running on a smaller or differently shaped tracking volume. To address this, we present a software system called Scenograph. The core of Scenograph is a tracking volume-independent representation of real-walking experiences. Scenograph instantiates the experience to a tracking volume of given size and shape by splitting the locations into smaller ones while maintaining narrative structure.

PDF | TEASER | VIDEO | TALK | PROJECT PAGE | SOURCE CODE

7. Mise-Unseen: Using Eye-Tracking to Hide Virtual Reality Scene Changes in Plain Sight

Sebastian Marwecki, Andrew D. Wilson, Eyal Ofek, Mar Gonzalez Franco, and Christian Holz. Full Paper and Demo at UIST '19

We present Mise-Unseen, a software system that applies covert scene changes inside the user’s field of view. Mise-Unseen leverages gaze tracking to create models of user attention, intention, and spatial memory to determine if and when to inject a change. We present seven applications of Mise-Unseen: (i) to hide that task difficulty is adapted to the user, (ii) to adapt the experience to the user’s preferences, (iii) to time the use of low-fidelity effects, (iv) to detect user choice for passive haptics even when lacking physical props, (v) to sustain physical locomotion despite a lack of physical space, (vi) to reduce motion sickness during virtual locomotion, and (vii) to verify user understanding during story progression.

PDF | VIDEO | TALK | ACM DL | MICROSOFT PROJECT PAGE

6. VirtualSpace - Overloading Physical Space with Multiple VR Users

Sebastian Marwecki, Maximilian Brehm, Lukas Wagner, Lung-Pan Cheng, Florian 'Floyd' Mueller, and Patrick Baudisch. Full Paper at CHI '18

Although virtual reality hardware is now widely available, the uptake of real walking is hindered by the fact that it often requires impractically large amounts of physical space. To address this, we present VirtualSpace, a novel system that allows overloading multiple users immersed in different VR experiences into the same physical space. VirtualSpace accomplishes this by containing each user at all times in a subset of the physical space, which we call a tile; app-invoked maneuvers then shuffle tiles and users across the entire physical space. This allows apps to move their users to where their narrative requires them to be while hiding from users that they are confined to a tile. We show how this enables VirtualSpace to pack four users into 16 m².

PDF | VIDEO | ACM DL | PROJECT PAGE | SOURCE CODE

5. Haptic PIVOT: On-Demand Handhelds in VR

Robert Kovacs, Eyal Ofek, Mar Gonzalez Franco, Alexa Fay Siu, Sebastian Marwecki, Christian Holz, and Mike Sinclair. Full Paper at UIST '20

We present PIVOT, a wrist-worn haptic device that renders virtual objects into the user's hand on demand. Its simple design comprises a single actuated joint that pivots a haptic handle into and out of the user's hand, rendering the haptic sensations of grasping, catching, or throwing an object anywhere in space. Unlike existing hand-held haptic devices and haptic gloves, PIVOT leaves the user's palm free when not in use, allowing users to make unencumbered use of their hand. PIVOT also enables rendering forces acting on the held virtual objects, such as gravity, inertia, or air-drag, by actively driving its motor while the user is firmly holding the handle. When worn on both hands, PIVOT devices can add haptic feedback to bimanual interaction, such as lifting larger objects.

4. iTurk: Turning Passive Haptics into Active Haptics by Making Users Reconfigure Props in Virtual Reality

Lung-Pan Cheng, Li Chang, Sebastian Marwecki, and Patrick Baudisch. Full Paper and Demo at CHI '18

We present a system that complements virtual reality experiences with passive props, yet still allows modifying the virtual world at runtime. The main contribution of our system is that it does not require any actuators; instead, our system employs the user to reconfigure and actuate otherwise passive props. We demonstrate a foldable prop that users reconfigure to represent a suitcase, a fuse cabinet, a railing, and a seat. A second prop, suspended from a long pendulum, not only stands in for inanimate objects, but also for objects that move and demonstrate proactive behavior, such as a group of flying droids that physically attack the user. Our approach conveys a sense of a living, animate world, when in reality the user is the only animate entity present in the system, complemented with only one or two physical props.

PDF | VIDEO | ACM DL | PROJECT PAGE

3. DualPanto: A Haptic Device that Enables Blind Users to Continuously Interact with Virtual Worlds

Oliver Schneider, Jotaro Shigeyama, Robert Kovacs, Thijs Jan Roumen, Sebastian Marwecki, Nico Boeckhoff, and Patrick Baudisch. Full Paper and Demo at UIST '18

We present a new haptic device that enables blind users to continuously track the absolute position of moving objects in spatial virtual environments, as is the case in sports or shooter games. Users interact with DualPanto by operating the "me" handle with one hand and by holding on to the "it" handle with the other hand. Each handle is connected to a pantograph haptic input/output device. The key feature is that the two handles are spatially registered with respect to each other. When guiding their avatar through a virtual world using the "me" handle, spatial registration enables users to track moving objects by having the device guide the output hand. This allows blind players of a 1-on-1 soccer game to race for the ball or evade an opponent; it allows blind players of a shooter game to aim at an opponent and dodge shots.

PDF | VIDEO | ACM DL | PROJECT PAGE

2. Mutual Human Actuation

Lung-Pan Cheng, Sebastian Marwecki, and Patrick Baudisch. Full Paper and Best Demo Award at UIST '17

PDF | VIDEO | ACM DL | PROJECT PAGE

1. Providing Haptics to Walls and Heavy Objects in Virtual Reality by Means of Electrical Muscle Stimulation

Pedro Lopes, Sijing You, Lung-Pan Cheng, Sebastian Marwecki, and Patrick Baudisch. Full Paper and Demo at CHI '17

In this project, we explored how to add haptics to walls and other heavy objects in virtual reality. Our main idea is to prevent the user’s hands from penetrating virtual objects by means of electrical muscle stimulation (EMS). Figure 1a shows an example. As the shown user lifts a virtual cube, our system lets the user feel the weight and resistance of the cube. The heavier the cube and the harder the user presses the cube, the stronger a counterforce the system generates. Figure 1b illustrates how our system implements the physicality of the cube, i.e., by actuating the user’s opposing muscles with EMS.

PDF | VIDEO | ACM DL | PROJECT PAGE

Prior Publications

MA Thesis: game mechanics for motor rehab tools

Sebastian Marwecki, Belén Ballester, Esther Duarte, Paul Verschure. Journal of Disability and Rehabilitation '17.

For my master's thesis at the Universitat Pompeu Fabra in Barcelona, Spain, I focused on Virtual Reality applications for motor relearning, which benefit stroke therapy. In a study, participants performed reaching tasks within a gamified context while being provided with feedback towards self-comparative mastery goals and other-comparative ego goals. This work is published in the Journal of Disability and Rehabilitation. My master's studies were funded by the German Academic Scholarship Foundation.

BA Thesis: games for CBT in groups of children with autism

Sebastian Marwecki, Roman Rädle, and Harald Reiterer. WIP at CHI '13 EA

For my bachelor's thesis at the University of Konstanz, Germany, I developed "Invasion of the Wrong Planet" - a game played on a hybrid interactive surface for cognitive-behavioral therapy for children on the autism spectrum. This project was awarded the Karl-Steinbuch Stipendium by the MFG Stiftung Baden-Württemberg and the VEUK award of the University of Konstanz. I presented this work at CHI '13 and '14 as a Workshop and WIP.

VIDEO 1 | VIDEO 2 | ACM DL | THESIS | SOURCE CODE

Other Projects

2. Stuff-Haptics: Stories with Passive Haptics for Limited, Uncurated Sets of Physical Props

Master Thesis with Moritz (winter '18-'19), and Master Project (summer '17) with Klara, Max, Alex, Jan

We present Stuff-Haptics, a software tool that provides consistent storylines across limited, uncurated sets of physical props. Unlike traditional passive haptics experiences, Stuff-Haptics does not require the set of physical props to be premeditated, and allows experiences to run anywhere, specifically in users’ homes. Stuff-Haptics accomplishes this with a prop-independent representation of the storyline, i.e., the narrative structure of events and the layout rules for mapping virtual content onto the available physical prop set. Stuff-Haptics lays out the virtual objects onto an annotated scan of the stuff in users’ homes. Given limited sets of props, Stuff-Haptics offers different solutions to best preserve the experience, either by re-using props or by pruning the storyline to still fit the most important story elements. In our user studies, Stuff-Haptics both successfully ran the same experience for different users’ prop sets and ran different passive haptics experiences on the same prop set.

1. Games With a Physical Purpose

Project Seminar (summer '19) with Tom and Erik

We propose a new genre of mixed reality games that make users perform physically demanding tasks as a byproduct of game play. We illustrate this with the example of Tower Pretense, a tower defense style game that as a side effect makes users move their household. We explain the design of such games that (1) have a consistent virtual game world, (2) match the physical world (such as users vacuuming their floor while virtually they are curling on ice), and (3) fulfill a purpose (such as cleaning windows and whiteboards while virtually chopping up fruits). Our main contribution is that we put mixed reality gamers to productive use. Participants of our user study enjoyed moving heavy boxes more when immersed in Tower Pretense (5.9 vs. 2.1/7) and they were less aware of the physical labor they performed (4.4 vs. 6.9/7).